Video Frame Interpolation PyTorch version: https://github.com/sniklaus/pytorch-sepconv

Video Frame Interpolation Torch version: https://github.com/sniklaus/torch-sepconv

Discussion: https://www.reddit.com/r/MachineLearning/comments/6zhy7u/r_video_frame_interpolation_via_adaptive/

Paper – Video Frame Interpolation via Adaptive Separable Convolution: https://arxiv.org/abs/1708.01692

Standard video frame interpolation methods first estimate optical flow between input frames and then synthesize an intermediate frame guided by motion. Recent approaches merge these two steps into a single convolution process by convolving input frames with spatially adaptive kernels that account for motion and re-sampling simultaneously. These methods require large kernels to handle large motion, which limits the number of pixels whose kernels can be estimated at once due to the large memory demand. To address this problem, this paper formulates frame interpolation as local separable convolution over input frames using pairs of 1D kernels. Compared to regular 2D kernels, the 1D kernels require significantly fewer parameters to be estimated. Our method develops a deep fully convolutional neural network that takes two input frames and estimates pairs of 1D kernels for all pixels simultaneously. Since our method is able to estimate kernels and synthesize the whole video frame at once, it allows for the incorporation of perceptual loss to train the neural network to produce visually pleasing frames. This deep neural network is trained end-to-end using widely available video data without any human annotation. Both qualitative and quantitative experiments show that our method provides a practical solution to high-quality video frame interpolation.
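The local separable convolution described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the function name `separable_interpolate`, the `(H, W, n)` kernel layout, the edge padding, and the single-channel frames are all assumptions, and the kernels are passed in by hand rather than predicted by a network.

```python
import numpy as np

def separable_interpolate(frame1, frame2, kv1, kh1, kv2, kh2):
    """Blend two grayscale frames using a pair of 1D kernels per output pixel.

    frame1, frame2: (H, W) arrays.
    kv*, kh*: (H, W, n) arrays with one vertical and one horizontal
    1D kernel per output pixel (n is assumed to be odd).
    """
    h, w = frame1.shape
    n = kv1.shape[-1]
    pad = n // 2
    # Edge padding is an assumption; it keeps every n x n patch in bounds.
    p1 = np.pad(frame1, pad, mode="edge")
    p2 = np.pad(frame2, pad, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for y in range(h):
        for x in range(w):
            patch1 = p1[y:y + n, x:x + n]
            patch2 = p2[y:y + n, x:x + n]
            # kv @ patch @ kh equals the patch weighted by the
            # 2D kernel outer(kv, kh), without ever building that kernel.
            out[y, x] = (kv1[y, x] @ patch1 @ kh1[y, x]
                         + kv2[y, x] @ patch2 @ kh2[y, x])
    return out
```

With kernels that are one-hot at the center and scaled so each frame contributes half the weight, the function reduces to a plain average of the two frames; shifting the one-hot position moves the sampling location, which is how such kernels can encode motion and re-sampling at once.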

Similar papers:

- https://arxiv.org/abs/1609.05158 – Real-Time Single Image and Video Super-Resolution

- https://arxiv.org/abs/1611.05250 – Real-Time Video Super-Resolution with Spatio-Temporal Networks

- https://arxiv.org/abs/1704.02738 – Detail-revealing Deep Video Super-resolution

- https://arxiv.org/abs/1707.00471 – End-to-End Learning of Video Super-Resolution with Motion Compensation

Video demo: http://web.cecs.pdx.edu/~fliu/project/sepconv/demo.mp4

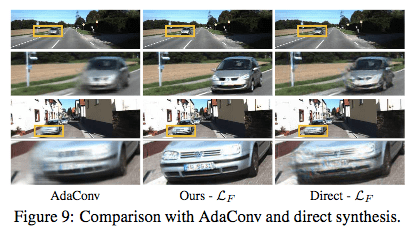

Video Frame Interpolation Comparison:

This paper presents a practical solution to high-quality video frame interpolation. The presented method combines motion estimation and frame synthesis into a single convolution process by estimating spatially adaptive separable kernels for each output pixel and convolving input frames with them to render the intermediate frame. The key to making this convolution approach practical is to use 1D kernels to approximate full 2D ones. The use of 1D kernels significantly reduces the number of kernel parameters and enables full-frame synthesis, which in turn supports the use of perceptual loss to further improve the visual quality of the interpolation results. Our experiments show that our method compares favorably to state-of-the-art interpolation methods both quantitatively and qualitatively and produces high-quality frame interpolation results.
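To make the parameter reduction concrete, the short calculation below compares how many kernel values must be estimated for a whole frame with full 2D kernels versus pairs of 1D kernels. The helper `kernel_param_counts`, the kernel size `n = 51`, and the 1080p resolution are illustrative assumptions, not figures taken from the text above.

```python
def kernel_param_counts(height, width, n, num_frames=2):
    """Return (full_2d, separable_1d) kernel value counts for one output frame.

    Each output pixel needs one kernel per input frame: n * n values for a
    2D kernel, or 2 * n values for a vertical/horizontal pair of 1D kernels.
    """
    pixels = height * width
    full_2d = pixels * num_frames * n * n
    separable_1d = pixels * num_frames * 2 * n
    return full_2d, separable_1d

full, sep = kernel_param_counts(1080, 1920, 51)
print(f"2D kernels: {full:,} values")
print(f"1D pairs:   {sep:,} values")
print(f"reduction:  {full / sep:.1f}x")
```

The ratio is n / 2 regardless of resolution, so larger kernels, which are needed for larger motion, benefit more from the separable form.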